Master Llama 3.1 function calling with our step-by-step Python tutorial. Learn to use the new Meta Llama 3.1 API to build responsive, tool-using AI agents. Complete code included.

The Power of a Model That Can Do Things

Since Meta released Llama 3.1, developer enthusiasm has stayed high, and for good reason.

Until recently, large language models were like brilliant experts locked in a room.

Trained on vast amounts of data, they can tell you a great deal, but they cannot act in the real world.

A model cannot check the weather forecast, book a flight, or send an email; it can only tell you about those things.

Llama 3.1’s function calling is the key that unlocks the door.

Function calling gives the model “tools”: connections to the outside world that run through code you provide.

That turns the model from a basic chatbot into a reasoning engine that can act as an AI agent.

This guide shows you exactly how to implement it.

We’ll work through the Meta Llama 3.1 API step by step in Python.

By the end, you’ll know how to build AI agents that go beyond conversation and carry out tasks.


Understanding the Core Concept: Giving Your LLM Tools

What is function calling? The easiest way to understand it is with an analogy.

Imagine an outstanding research assistant who has read everything ever written.

The assistant is highly intelligent but sits in a windowless room with no internet access.

Ask about historical events and you get flawless answers, because the assistant knows the past thoroughly.

Ask about the current weather and the assistant has nothing to offer, because there is no view of the outside world.

Now give the assistant a toolkit: a phone with a single weather-service number and instructions to use it whenever someone asks about the weather in a city.

The assistant calls the weather service, gets the information, and reports back to the person who asked.

Function calling works the same way. We give the model specific tools (our own functions) along with descriptions of what those tools do and how to call them.

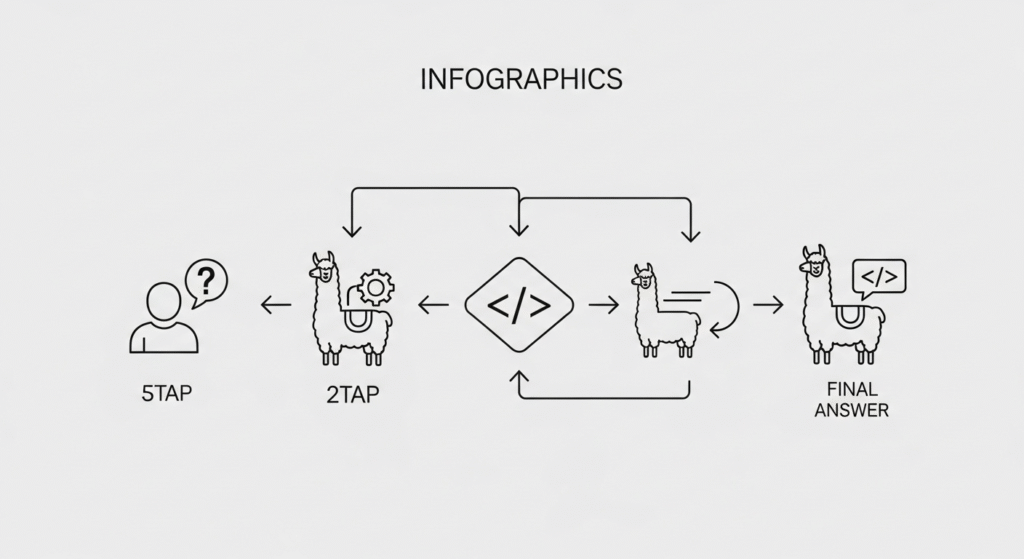

Technically speaking, it’s a multi-step process:

- Llama 3.1 receives your prompt, such as “What’s the forecast for London this evening?”

- The model recognizes that it cannot answer from its own knowledge and picks out the tool you described in the request.

- The model generates a structured command that says: “I need to run the get_weather function with the parameter location: ‘London’.”

- Your application reads this command and runs your actual get_weather code, which might contact a real weather API to get the result.

- Your application sends the result back to Llama 3.1.

- The model uses this fresh real-world data to write a natural-language answer, such as “The forecast for London this evening is partly cloudy with a temperature of 15°C.”
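The steps above can be sketched offline, with a stub standing in for the model. Everything here is an assumption made for illustration: `fake_model` is a hypothetical placeholder that always asks for London, `get_weather` returns hardcoded data, and the message shapes are simplified. No real API is called.

```python
import json

def get_weather(location: str) -> str:
    """Stand-in tool: returns hardcoded data instead of calling a weather API."""
    return json.dumps({"location": location, "forecast": "partly cloudy", "temperature_c": 15})

def fake_model(messages: list) -> dict:
    """Hypothetical stub for the model: ask for the tool first, answer once a result exists."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        # Steps 2-3: no data yet, so emit a structured tool request (location is hardcoded here).
        return {"role": "assistant", "content": None,
                "tool_call": {"name": "get_weather", "arguments": {"location": "London"}}}
    # Step 6: turn the tool result into a natural-language answer.
    data = json.loads(tool_results[-1]["content"])
    return {"role": "assistant",
            "content": f"The forecast for {data['location']} is {data['forecast']} at {data['temperature_c']}°C."}

def run_once(prompt: str) -> str:
    messages = [{"role": "user", "content": prompt}]           # step 1: the prompt
    reply = fake_model(messages)                               # steps 2-3: model asks for a tool
    messages.append(reply)
    if reply.get("tool_call"):
        call = reply["tool_call"]
        result = get_weather(**call["arguments"])              # step 4: our code runs the tool
        messages.append({"role": "tool", "content": result})   # step 5: result goes back
        reply = fake_model(messages)                           # step 6: final answer
    return reply["content"]

print(run_once("What's the forecast for London this evening?"))
```

The point of the sketch is the shape of the loop: the model never runs `get_weather` itself; the application does, and then hands the result back.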

Why Llama 3.1’s Version is a Game-Changer

Llama 3.1 is a significant step forward in function calling, which is why developers are excited about it.

Higher Accuracy: Llama 3.1 is better at deciding when to use a tool and which tool to pick when several are available.

It is also more precise about the parameter values it passes, which makes AI agents more reliable.

Lower Latency: Speed is critical. Llama 3.1 is designed to decide on function calls quickly, and the faster response makes the experience feel more interactive.

Smarter, Multi-Turn Tool Use: This is a huge advantage. Llama 3.1 keeps the history of earlier tool calls in view.

If you ask about the weather in London and then follow up with “And New York?”, the model understands that it needs to call get_weather again. This allows more sophisticated, humanlike interaction.

What You’ll Need to Get Started

Before we dive into the fun part, let’s quickly gather the tools required to get started. Don’t worry, the setup is very simple. Here’s a checklist of what you’ll need:

- Python (version 3.8 or newer): Many systems already have a compatible version installed; you can check with python --version.

- A Meta Llama 3.1 API Key: This is your personal pass to use the model. You can get one from the official Meta AI developer page.

- Basic Python Knowledge: You don’t need to be a guru! Just being comfortable with running a simple Python script is enough to follow along.

- A Code Editor: Use whatever you like best. We’ll be showing examples that work perfectly in a popular free editor like VS Code.
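A small, optional sketch for the API key item above: rather than pasting the key into your script, you could read it from an environment variable. The variable name `LLAMA_API_KEY` and the helper `load_api_key` are assumptions made for this example, not names defined by any Meta documentation.

```python
import os

def load_api_key(env_var: str = "LLAMA_API_KEY") -> str:
    """Read the API key from an environment variable (the variable name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message instead of sending an empty key to the API.
        raise RuntimeError(f"Set the {env_var} environment variable before running the tutorial code.")
    return key
```

With this in place, a script can use `API_KEY = load_api_key()` and the key never needs to be committed to source control.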

Installing the Necessary Python Libraries

The good news is that this tutorial needs only one library: requests, the widely used Python library for talking to web APIs.

To install it, run this command in your terminal or command prompt:

```bash
# This library helps us communicate with the Llama 3.1 API
pip install requests
```

And that’s it! With that one library installed, your environment is all set up. Now, let’s start building.

A Practical Example: Building a Real-Time Weather Agent

With the theory covered, let’s turn to a practical implementation.

We’ll build a basic but effective AI agent that retrieves the current weather.

The example walks through every stage of the function calling process from start to finish.

Step 1: Define Your Python “Tool”

We start by writing the actual “tool” that our AI will use. The tool is an ordinary Python function that lives in our own codebase.

We are the ones who run this code; Llama 3.1 only tells us when to run it.

Our weather agent needs a function named get_current_weather.

```python
# tools.py
import json

def get_current_weather(location: str, unit: str = "celsius"):
    """
    Gets the current weather for a specified location.
    """
    # This print statement is for us, so we can see when our function is being used.
    print(f"--- Calling our function: get_current_weather(location='{location}', unit='{unit}') ---")

    # In a real-world app, you would make an API call to a real weather service here.
    # For this tutorial, we'll just pretend and return some sample data.
    weather_info = {
        "location": location,
        "temperature": "22",
        "unit": unit,
        "forecast": "Sunny with a chance of clouds",
    }

    # We return the data as a JSON string, a common format for APIs.
    return json.dumps(weather_info)
```

Think of this function as the engine. It’s ready to do the real work, but it’s just sitting there waiting for instructions.

Step 2: Describe the Tool for the Llama 3.1 API

This step is the most important part of the whole process.

Our function exists, but Llama 3.1 has no idea that it exists or how to use it.

We must provide it with an “instruction manual.”

This specially formatted description tells the model the tool’s name, what it does, and which parameters it needs.

The structured description is the link between the user’s question and the code you wrote.

Here is the instruction manual for our get_current_weather function.

```python
# The tool "manual" that Llama 3.1 will read
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g., San Francisco, CA",
                    },
                    "unit": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"],
                        "description": "The unit of temperature.",
                    },
                },
                "required": ["location"],
            },
        },
    }
]
```

Let’s quickly break that down:

- name: This must exactly match our Python function’s name.

- description: This is for the AI. It’s a simple, human-readable sentence that Llama 3.1 uses to figure out if this tool is the right one for the job.

- parameters: This lists the arguments our function needs. We’re telling the model that get_current_weather needs a location (which is required) and can optionally take a unit.

With this manual, Llama 3.1 is now fully equipped. It knows what tools are in its toolbox and exactly how to ask for them.
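Because the model fills in the arguments, it can be worth checking them against the same description before running your function. The following is a minimal sketch under stated assumptions: `validate_arguments` is a hypothetical helper written for this example, and it covers only the `required`, `type: string`, and `enum` fields used in the manual above, not the full JSON Schema specification.

```python
def validate_arguments(parameters: dict, arguments: dict) -> list:
    """Return a list of problems found; an empty list means the arguments look usable."""
    problems = []
    properties = parameters.get("properties", {})

    # Every name listed under "required" must be present.
    for name in parameters.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")

    # Every supplied argument must be declared and match its simple constraints.
    for name, value in arguments.items():
        spec = properties.get(name)
        if spec is None:
            problems.append(f"unexpected argument: {name}")
            continue
        if spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"{name} should be a string")
        if "enum" in spec and value not in spec["enum"]:
            problems.append(f"{name} must be one of {spec['enum']}")
    return problems

# The "parameters" section of the get_current_weather manual.
weather_parameters = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["location"],
}

print(validate_arguments(weather_parameters, {"location": "Tokyo"}))
print(validate_arguments(weather_parameters, {"unit": "kelvin"}))
```

If the list is non-empty, one option is to send the problems back to the model as the tool result so it can correct its request.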

Step 3: Make the First API Call (User Prompt -> Model)

The tool is written and its manual is complete.

The next step is to ask Llama 3.1 a question.

We send the user’s prompt together with the tools manual we just created.

That way the model receives both the question and a description of the abilities it can use to answer it.

```python
import requests
import json

# --- (You would have your tools and function definition from above here) ---

# Your API Key and the API endpoint
API_KEY = "YOUR_META_API_KEY"  # IMPORTANT: Replace with your actual key
API_URL = "https://api.llama.meta.com/v1/chat/completions"  # Example URL

# The user's question
user_prompt = "What's the weather like in Tokyo?"

# The API request payload
payload = {
    "model": "llama-3.1-8b-instruct",
    "messages": [
        {"role": "user", "content": user_prompt}
    ],
    "tools": tools,  # This is our "instruction manual" from Step 2
    "tool_choice": "auto"  # We let Llama decide when to use a tool
}

headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json"
}

# Let's make the first call!
print("--- Sending first request to Llama 3.1 ---")
response = requests.post(API_URL, headers=headers, json=payload)
response_data = response.json()

print("--- Received response from Llama 3.1 ---")
print(json.dumps(response_data, indent=2))
```

Step 4: Interpret the Model’s “Tool Call” Response

This is where things get interesting. If Llama 3.1 decides it needs our tool, it does not answer in plain text.

Instead, the model sends back structured instructions telling us which function to run and which arguments to use.

Running the code above prints a JSON response that looks something like this:

```json
{
  "id": "chatcmpl-...",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": null,
        "tool_calls": [
          {
            "id": "tool_call_12345",
            "type": "function",
            "function": {
              "name": "get_current_weather",
              "arguments": "{\"location\": \"Tokyo\"}"
            }
          }
        ]
      },
      "finish_reason": "tool_calls"
    }
  ]
}
```

Look closely at the tool_calls part. Llama is telling us: “I need you to run the get_current_weather function and use Tokyo for the location.” Our application’s job is now to read these instructions and act on them.

Here’s how we can parse that response in Python:

```python
# Extract the first tool call from the response
tool_call = response_data['choices'][0]['message']['tool_calls'][0]
function_name = tool_call['function']['name']
function_args_str = tool_call['function']['arguments']

# The arguments come as a string, so we need to convert them to a Python dictionary
arguments = json.loads(function_args_str)

print(f"Llama wants to call the function: '{function_name}'")
print(f"With these arguments: {arguments}")
```
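The parsing above assumes the model always returns a tool call with well-formed arguments. A slightly more defensive version might look like the sketch below. `extract_tool_calls` is a hypothetical helper written for this example, and the response shape it expects is the one shown in this tutorial, which a real endpoint may not match exactly.

```python
import json

def extract_tool_calls(response_data: dict) -> list:
    """Return (call_id, name, arguments) tuples; empty if the model answered in plain text."""
    message = response_data["choices"][0]["message"]
    parsed = []
    # "tool_calls" may be missing or null when the model answers directly.
    for call in message.get("tool_calls") or []:
        raw_args = call["function"].get("arguments") or "{}"
        try:
            arguments = json.loads(raw_args)
        except json.JSONDecodeError:
            # Malformed arguments: fall back to an empty dict rather than crashing.
            arguments = {}
        parsed.append((call["id"], call["function"]["name"], arguments))
    return parsed

# Sample response in the shape shown above.
sample = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "tool_call_12345",
                "type": "function",
                "function": {"name": "get_current_weather",
                             "arguments": "{\"location\": \"Tokyo\"}"},
            }],
        },
        "finish_reason": "tool_calls",
    }]
}

for call_id, name, arguments in extract_tool_calls(sample):
    print(call_id, name, arguments)
```

Returning a list keeps the calling code the same whether the model asked for zero, one, or several tools.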

Step 5: Execute the Tool and Send the Result Back

Our code now has its marching orders. Let’s execute the requested function and then report the result back to Llama 3.1 so it can give the user a final, natural-sounding answer.

This involves two parts:

- Running our actual get_current_weather Python function.

- Making a second API call, this time including the original prompt, the model’s decision to use a tool, and the result of that tool’s execution.

```python
# First, let's actually run our Python function with the arguments Llama gave us
tool_output = get_current_weather(
    location=arguments['location'],
    unit=arguments.get('unit', 'celsius')  # Fall back to the default if the model omitted it
)
print(f"--- Our function returned: {tool_output} ---")

# Now, we build a new request to send the tool's output back to Llama
# We must include the history of the conversation so far
conversation_history = [
    {"role": "user", "content": user_prompt},  # The original question
    response_data['choices'][0]['message'],    # The model's "tool_call" response
    {                                          # The result of our tool
        "role": "tool",
        "tool_call_id": tool_call['id'],
        "content": tool_output
    }
]

# Create the new payload for the second API call
second_payload = {
    "model": "llama-3.1-8b-instruct",
    "messages": conversation_history
}

# Make the second API call
print("\n--- Sending second request with tool result ---")
final_response = requests.post(API_URL, headers=headers, json=second_payload)
final_response_data = final_response.json()
```

Step 6: Receive the Final, Context-Aware Response

And now for the grand finale. After receiving the weather data from our tool, Llama 3.1 has everything it needs. The second API call will return a response containing a simple, conversational answer for you.

The final JSON response will look like this:

```json
{
  "id": "chatcmpl-...",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "The current weather in Tokyo is 22°C and it's sunny with a chance of clouds."
      },
      "finish_reason": "stop"
    }
  ]
}
```

As you can see, the content field now has the perfect answer. Here’s how to print just that part:

```python
# Extract and print the final, human-friendly answer
final_answer = final_response_data['choices'][0]['message']['content']

print("\n--- Llama's Final Answer ---")
print(final_answer)
```

And there you have it! You’ve successfully guided Llama 3.1 to use an external tool to find real-time information and formulate a perfect response.

A Full, Copy-and-Paste Example for AI Agent Development

We have gone through each step individually, but the best way to learn is to see the whole process working together.

The following script combines everything we have discussed in this guide into one complete, executable program.

Copy the code, enter your API key, and run it in your terminal. The comments explain each step of the process.

```python
#!/usr/bin/env python3
# A complete, step-by-step script for Llama 3.1 Function Calling

import requests
import json

# --- 1. CONFIGURATION: Set your API Key and other details here ---

# IMPORTANT: Replace "YOUR_META_API_KEY" with your actual API key.
API_KEY = "YOUR_META_API_KEY"

# This is an example URL. Check the official Meta documentation for the correct one.
API_URL = "https://api.llama.meta.com/v1/chat/completions"

# The model we want to use.
MODEL_NAME = "llama-3.1-8b-instruct"


# --- 2. THE "TOOL": Our actual Python function ---
# This is the real-world code that our AI agent will be able to use.
# Llama doesn't run this code; it tells US when to run it.

def get_current_weather(location: str, unit: str = "celsius"):
    """
    Gets the current weather for a specified location.
    In a real app, this function would call a real weather service API.
    For this tutorial, we'll just return some fake, hardcoded data.
    """
    print(f"\n>>> EXECUTING TOOL: get_current_weather(location='{location}', unit='{unit}')")
    weather_info = {
        "location": location,
        "temperature": "18",
        "unit": unit,
        "forecast": "Mostly cloudy with a chance of rain.",
    }
    # It's best practice to return data as a JSON string.
    return json.dumps(weather_info)


# --- 3. THE "TOOL MANUAL": Describing our function for Llama ---
# This is the instruction manual we give to the AI. It explains what our
# tool does, what its name is, and what information (parameters) it needs.
# This is the most critical part of making function calling work.

tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location.",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g., Boston, MA",
                    },
                    "unit": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"],
                        "description": "The unit of temperature to use.",
                    },
                },
                "required": ["location"],
            },
        },
    }
]


# --- 4. THE MAIN LOGIC: Putting it all together ---

def main():
    """The main function to run our weather agent."""
    # The user's question that should trigger our tool.
    user_prompt = "What's the weather like in Dublin, Ireland right now?"
    print(f"--- User Prompt: '{user_prompt}' ---")

    # The conversation starts with the user's message.
    messages = [
        {"role": "user", "content": user_prompt}
    ]

    # == STEP 1: Make the first API call to Llama ==
    # We send the user's prompt and the list of available tools.
    # Llama will decide if a tool is needed.
    headers = {"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"}
    payload = {
        "model": MODEL_NAME,
        "messages": messages,
        "tools": tools,
        # 'auto' lets the model decide. OpenAI-compatible APIs typically use
        # 'required' to force a tool call; check your provider's documentation.
        "tool_choice": "auto"
    }

    print("\n--- 1. Sending request to Llama to see if a tool is needed... ---")
    response = requests.post(API_URL, headers=headers, json=payload)

    if response.status_code != 200:
        print(f"Error: API request failed with status code {response.status_code}")
        print(response.text)
        return

    response_data = response.json()
    first_response_message = response_data['choices'][0]['message']

    # We add the model's first response to our conversation history.
    messages.append(first_response_message)

    # == STEP 2: Check if the model decided to call a tool ==
    if not first_response_message.get("tool_calls"):
        print("\n--- Llama answered directly without using a tool: ---")
        print(first_response_message.get("content"))
        return  # We're done if no tool was called.

    print("\n--- 2. Llama decided a tool is needed! ---")
    print(json.dumps(first_response_message['tool_calls'], indent=2))

    # == STEP 3: Execute the tool call and get the result ==
    # If we are here, it means the model wants to use one of our tools.
    tool_call = first_response_message['tool_calls'][0]
    function_name = tool_call['function']['name']

    # In a real app, you would have a more robust way to map names to functions.
    if function_name == "get_current_weather":
        # The arguments are a JSON string, so we need to parse them.
        arguments = json.loads(tool_call['function']['arguments'])

        # Now we call our *actual* Python function with the arguments Llama provided.
        tool_output = get_current_weather(
            location=arguments.get("location"),
            unit=arguments.get("unit", "celsius")  # Use a default if not provided
        )

        # We add the tool's output to the conversation history.
        # This tells the model what happened when we ran the tool.
        messages.append({
            "role": "tool",
            "tool_call_id": tool_call['id'],
            "content": tool_output
        })
    else:
        # Without this branch, an unexpected tool name would leave no tool result to send.
        print(f"Error: the model requested an unknown tool: {function_name}")
        return

    # == STEP 4: Make the second API call with the tool's result ==
    # Now that we've run the tool, we send the *entire* conversation history
    # back to Llama, including the tool's result. Llama will use this
    # new information to generate its final, human-readable answer.
    print("\n--- 3. Sending tool result back to Llama for a final answer... ---")
    second_payload = {
        "model": MODEL_NAME,
        "messages": messages
    }

    final_response = requests.post(API_URL, headers=headers, json=second_payload)

    if final_response.status_code != 200:
        print(f"Error: API request failed with status code {final_response.status_code}")
        print(final_response.text)
        return

    final_response_data = final_response.json()
    final_answer = final_response_data['choices'][0]['message']['content']

    print("\n--- 4. Llama's Final, Context-Aware Answer: ---")
    print(final_answer)


# This makes the script runnable from the command line.
if __name__ == "__main__":
    main()
```

To run it, save this code as weather_agent.py, replace YOUR_META_API_KEY with your actual key, and launch it from the terminal with python weather_agent.py.

This script is a basic framework that you can build on to create more sophisticated AI agents.
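The script’s comment about needing “a more robust way to map names to functions” could be addressed with a simple lookup table. This is one possible sketch, not the only way to do it: `TOOL_REGISTRY` and `dispatch` are names invented for this example, and the tool here returns hardcoded data so the snippet runs without any API.

```python
import json

def get_current_weather(location: str, unit: str = "celsius") -> str:
    """Same fake tool as in the tutorial: returns hardcoded sample data."""
    return json.dumps({"location": location, "temperature": "18", "unit": unit})

# Map each tool name (as written in the tool manual) to the Python function behind it.
TOOL_REGISTRY = {
    "get_current_weather": get_current_weather,
}

def dispatch(tool_call: dict) -> dict:
    """Run the requested tool and return a ready-to-append "tool" message."""
    name = tool_call["function"]["name"]
    func = TOOL_REGISTRY.get(name)
    if func is None:
        # Report unknown tools back to the model instead of raising.
        content = json.dumps({"error": f"unknown tool: {name}"})
    else:
        arguments = json.loads(tool_call["function"]["arguments"])
        content = func(**arguments)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": content}

example_call = {
    "id": "tool_call_12345",
    "type": "function",
    "function": {"name": "get_current_weather", "arguments": "{\"location\": \"Dublin\"}"},
}
print(dispatch(example_call))
```

Adding a new tool then means writing the function, describing it in the manual, and adding one entry to the registry; the dispatch code stays the same.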

Taking Your Llama 3.1 Agent to the Next Level

What happens when a user asks for something that needs more than one tool? For example:

“What’s the weather in Paris and what’s the current stock price of Apple?”

Llama 3.1 can recognize that this needs two distinct tools, one for weather and one for stock data.

Rather than making you go back and forth one tool at a time, it can request both calls in a single response.

It is like handing your assistant one shopping list with every item on it instead of sending them to the store once per item.

In that case, the API response contains a tool_calls list with multiple items.

Your code just needs to be ready to loop through them.

```python
# A simple way to handle multiple tool calls
tool_calls = response_data['choices'][0]['message']['tool_calls']

# Create a list to hold the output of each tool
tool_outputs = []

for tool_call in tool_calls:
    # Get the function name and parse its JSON-string arguments
    function_name = tool_call['function']['name']
    arguments = json.loads(tool_call['function']['arguments'])

    if function_name == "get_current_weather":
        output = get_current_weather(**arguments)
    elif function_name == "get_stock_price":
        # get_stock_price is a second tool you would define yourself
        output = get_stock_price(**arguments)
    else:
        output = f"Error: unknown tool '{function_name}'"

    tool_outputs.append({
        "tool_call_id": tool_call['id'],
        "role": "tool",
        "content": output
    })

# Now, when you make your second API call, your 'messages' list
# will include all the outputs from all the tools that were run.
```

For truly advanced applications, you could even run these function calls at the same time (in parallel) to make your agent feel even faster.
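As a rough sketch of the parallel idea, Python’s standard `concurrent.futures.ThreadPoolExecutor` can run several tool calls at once. The two tools below are stand-ins that sleep briefly to imitate network latency and return placeholder data; `get_stock_price`, `TOOLS`, `run_tool`, and `run_tools_in_parallel` are all names assumed for this example.

```python
import json
import time
from concurrent.futures import ThreadPoolExecutor

def get_current_weather(location: str) -> str:
    time.sleep(0.1)  # Simulate a slow network call
    return json.dumps({"location": location, "temperature": "18"})

def get_stock_price(symbol: str) -> str:
    time.sleep(0.1)  # Simulate a slow network call
    return json.dumps({"symbol": symbol, "price": "placeholder"})

TOOLS = {"get_current_weather": get_current_weather, "get_stock_price": get_stock_price}

def run_tool(tool_call: dict) -> dict:
    """Execute one tool call and wrap its output as a "tool" message."""
    func = TOOLS[tool_call["function"]["name"]]
    arguments = json.loads(tool_call["function"]["arguments"])
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": func(**arguments)}

def run_tools_in_parallel(tool_calls: list) -> list:
    """Run all tool calls concurrently, returning results in the original order."""
    with ThreadPoolExecutor(max_workers=max(1, len(tool_calls))) as pool:
        # Executor.map preserves input order, so results line up with tool_calls.
        return list(pool.map(run_tool, tool_calls))

calls = [
    {"id": "call_1", "function": {"name": "get_current_weather",
                                  "arguments": json.dumps({"location": "Paris"})}},
    {"id": "call_2", "function": {"name": "get_stock_price",
                                  "arguments": json.dumps({"symbol": "AAPL"})}},
]
for message in run_tools_in_parallel(calls):
    print(message)
```

Threads suit this case because tool calls usually spend their time waiting on network I/O. The returned messages can be appended to the conversation in order, each carrying its own `tool_call_id`.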

Error Handling: What if a Tool Fails?

Real-world APIs do fail. A server can go down, or someone can ask for the weather in a city that doesn’t exist.

If your function raises an unhandled exception, the whole process grinds to a halt.

The best practice is to anticipate errors inside your tool and report them back to Llama 3.1.

The model is quite good at interpreting these errors and will write a useful reply for the user.

Here’s how you can modify your tool to handle this:

```python
def get_current_weather(location: str, unit: str = "celsius"):
    try:
        # --- Pretend this is a real API call that might fail ---
        if location.lower() == "nonexistent city":
            raise ValueError("City not found in the weather database.")

        # ... (your normal logic to get weather) ...
        weather_info = {"location": location, "temperature": "15"}
        return json.dumps(weather_info)

    except Exception as e:
        # If anything goes wrong, catch the error...
        print(f"ERROR in tool: {e}")
        # ...and return a clear error message as a string.
        return f"Error: Could not retrieve weather for {location}. Reason: {e}"
```

If you send this error message back to Llama 3.1 in the tool message, it will notice the error and may reply to the user with something like:

“I’m sorry, I could not locate ‘Nonexistent City’ in my weather database. Please check the spelling.”

This makes your agent a lot stronger and smarter.
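If you have several tools, repeating the same try/except in each one gets tedious. One option, sketched here under the assumption that a JSON error object is an acceptable tool result for your setup, is a small decorator. `safe_tool` is a name made up for this example.

```python
import functools
import json

def safe_tool(func):
    """Wrap a tool so that any exception becomes a JSON error string for the model."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        try:
            return func(*args, **kwargs)
        except Exception as exc:
            # Return the failure as data instead of letting it crash the agent loop.
            return json.dumps({"error": str(exc), "tool": func.__name__})
    return wrapper

@safe_tool
def get_current_weather(location: str, unit: str = "celsius") -> str:
    # Pretend this is a real API call that might fail.
    if location.lower() == "nonexistent city":
        raise ValueError("City not found in the weather database.")
    return json.dumps({"location": location, "temperature": "15", "unit": unit})

print(get_current_weather("Dublin"))
print(get_current_weather("Nonexistent City"))
```

The second call returns an error object rather than raising, so the agent loop can pass it to the model like any other tool result.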

Managing Conversation History (Multi-Turn Calls)

This is the secret to building an AI that can hold a genuine conversation.

An agent that can’t recall what you’ve just been discussing is not very useful.

Here is an example conversation:

User: “What is the weather in London?”

Agent: (Uses tool) “The weather in London is 15°C and cloudy.”

User: “Okay, and what about in New York?”

For the agent to realize that “what about in New York” is a question about the weather, it needs the context of the whole conversation.

The answer is simple: never discard your messages list.

On each turn, you simply append to it.

The list needs to contain every user input, every model output (including its decision to invoke a tool), and every tool result.

By sending this full history to the API with each new call, you give the model a short-term memory, which makes follow-up questions and harder multi-step tasks possible.
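The append-only history described above can be wrapped in a small helper. This is a minimal sketch: the `Conversation` class is hypothetical, and the assistant messages are written by hand here to stand in for real API responses.

```python
class Conversation:
    """Append-only message history to send with every API call."""

    def __init__(self):
        self.messages = []

    def add_user(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, message: dict) -> None:
        # Store the model's message as-is, including any tool_calls it contains.
        self.messages.append(message)

    def add_tool_result(self, tool_call_id: str, content: str) -> None:
        self.messages.append({"role": "tool", "tool_call_id": tool_call_id, "content": content})


chat = Conversation()

# Turn 1: question, tool request, tool result, final answer.
chat.add_user("What is the weather in London?")
chat.add_assistant({"role": "assistant", "content": None, "tool_calls": [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_current_weather", "arguments": "{\"location\": \"London\"}"}}]})
chat.add_tool_result("call_1", "{\"location\": \"London\", \"temperature\": \"15\"}")
chat.add_assistant({"role": "assistant", "content": "The weather in London is 15°C and cloudy."})

# Turn 2: the follow-up only makes sense because the earlier messages are still in the list.
chat.add_user("Okay, and what about in New York?")

print([m["role"] for m in chat.messages])
```

Each new request would send `chat.messages` in full, so the model sees the London exchange when it interprets the New York follow-up.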


Your Next Steps with Llama 3.1 Function Calling

There’s a lot we’ve talked about, but the main process is simple and effective. At its heart, you’ve learned a simple six-step dance:

- Define your tool as a standard Python function.

- Describe that tool in a special “manual” that Llama 3.1 can understand.

- Call the API with a user’s prompt and your list of tools.

- Interpret the model’s response when it asks to use a tool.

- Execute your function and send the result back to the model.

- Finalize the loop by receiving a final, context-aware answer.

This is not merely a clever gimmick; it represents a significant change in how we develop applications.

Llama 3.1 function calling connects the model’s extensive knowledge with the real-time, interactive realm.

It serves as the essential component for progressing beyond basic chatbots and crafting truly practical and responsive AI agents capable of scheduling appointments, analyzing live data, controlling smart devices, and much more.

Therefore, the question arises: what will you create? An assistant that helps you organize your calendar?

A platform that condenses the latest articles from your preferred news sources? The opportunities are limitless.

Frequently Asked Questions

1. What’s the main difference between Llama 3 and Llama 3.1 for function calling?

Think of Llama 3.1 as a significant upgrade specifically tuned for tool use. While Llama 3 had some capability in this area, Llama 3.1 is substantially better. The key improvements are higher accuracy (it makes fewer mistakes when picking a tool and its parameters), lower latency (it decides to call a function much faster), and smarter multi-turn conversation skills, making it more reliable for building complex agents.

2. Can Llama 3.1 call functions that require authentication or API keys?

Absolutely, and this is a crucial point. Llama 3.1 does not run your code or handle your secrets. It only tells you which function to run and what parameters to use. Your actual Python function is where you securely handle things like authentication. Your secret API keys for other services (like a weather API or a stock market API) stay safely within your own code, never being sent to the Llama model.

3. Is Llama 3.1 function calling free to use?

The function calling feature itself doesn’t have a separate fee—it’s part of the model’s core capability. However, you do pay for the usage of the Meta Llama 3.1 API, which is typically billed based on the number of “tokens” (pieces of words) you send and receive. It’s important to remember that your tool descriptions and the model’s tool-call responses also count as tokens, so they contribute to the overall API usage cost.

4. How does this compare to OpenAI’s function calling feature?

Both Llama 3.1 and OpenAI’s models offer excellent, mature function calling capabilities, but they cater to slightly different needs. OpenAI’s feature has been around longer and is very well-documented. However, Llama 3.1 is a highly competitive and powerful alternative, often praised for its impressive performance, speed, and more open nature (with model weights available for self-hosting). For developers looking for a top-tier, state-of-the-art model with more flexibility, Llama 3.1 is an extremely compelling choice.