Can you really trust ZeroGPT’s 98% accuracy claim? We put the AI detector to the test. See our surprising results on its true performance.

Ever read something online and wondered if a human or a machine wrote it? You’re not alone.

In this age of AI, it’s getting harder to tell the difference between content created by people and content created by AI.

In academic and professional settings, reliable and precise tools for identifying AI-generated content have become essential.

As AI-generated text grows more sophisticated, it becomes much harder to uphold academic integrity and to make sure that information on the internet is genuine.

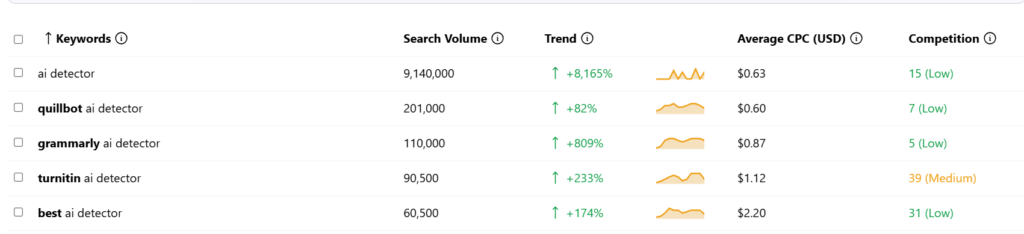

As you can see, data from Keywordtool.io shows that more than 9 million people search Google for “AI Detector” every month.

When I searched Google for “AI detector,” the results looked like the image above, with ZeroGPT ranked second.

ZeroGPT claims it can distinguish AI-generated from human-written text with over 98% accuracy for models such as ChatGPT, GPT-4, and Gemini.

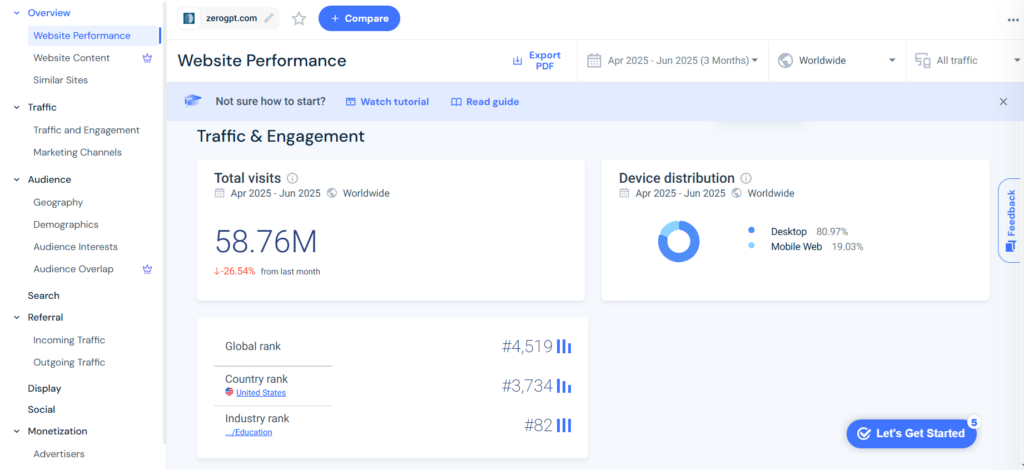

It’s no surprise that tools like ZeroGPT have seen explosive growth. According to SimilarWeb, the platform attracted over 58 million visitors in a single month, with its AI detection tool being the main draw.

However, numerous studies and user reports suggest that ZeroGPT’s real-world accuracy is much lower, with some tests placing it between 35% and 65%.

This discrepancy between the company’s claims and the results of independent analysis raises serious questions about the tool’s reliability.

This isn’t just about cheating on an essay; it’s about the erosion of trust in the information we consume daily, from news articles to product reviews.

In this article, I will put ZeroGPT through a thorough test using real-world examples to assess its accuracy and determine whether it is a reliable tool or whether its claims are largely speculative.


The 98% Accuracy Claim: What ZeroGPT Says

ZeroGPT claims that it can identify AI-generated text with over 98% accuracy.

The figure appears on the company’s official website and in a number of reviews; some even cite a precision rate as high as 98.80%.

This high level of accuracy is promoted as a central feature of the tool, promising users a reliable way to determine whether a document was written by a person or a machine.

This bold claim rests on what ZeroGPT describes as its patented “DeepAnalyse™ Technology.”

According to the company, this system uses complex, multi-stage algorithms that analyze text from both a macro and a micro perspective to detect the subtle patterns of AI-generated content.

ZeroGPT says it analyzed more than 10 million articles to train its model to differentiate between human-written and AI-generated text.

The algorithms are also reportedly backed by published research papers and internal experiments.

ZeroGPT is aimed at students, professors, authors, copywriters, and other professionals who need to verify the authenticity of content.

Its multi-language support and user-friendly interface make it approachable for a worldwide audience.

Despite these confident claims and sophisticated-sounding technology, the lack of transparency is a major point of contention.

It is not publicly known what precise methods, datasets, and parameters were employed to reach and validate the 98% accuracy figure.

Without independent verification, and with no way to examine the methodology behind the figure, users and academics find it hard to fully accept the results.

Accuracy Test for ZeroGPT: The Results

We ran ZeroGPT’s popular AI detector on five different types of text, described below, and the results were mixed.

Pure Human Text

Characterized by varied sentence lengths, natural rhythm, and occasional grammatical imperfections. Exhibits personal tone, idiomatic expressions, and conceptual depth. AI detection is typically low.

Pure AI Text (GPT-4)

Demonstrates uniform sentence structure, consistent tone, and high lexical density. Often uses predictable transitional phrases. Lacks personal voice. AI detection is typically very high.

AI Text, Human-Edited

A hybrid style. Retains some AI structure but includes human elements like varied sentence flow, idioms, or corrected terminology. AI detection results are often mixed and unreliable.

Non-Native Speaker Text

May feature unconventional phrasing, simpler sentence structures, or minor grammatical errors. The logic is human, but the expression can be flagged by AI detectors due to pattern deviations.

AI-Humanized Text

AI text processed through paraphrasing tools to mimic human writing. Intentionally introduces varied sentence length and word choice to evade detection. Performance of AI detectors varies greatly.

1. Pure Human Text

- Human Text: “So, I was trying to fix my bike last weekend—what a disaster. I thought it was just a simple flat tire, you know? But then I noticed the chain was all gunked up, and one of the brake pads was completely worn down. It’s funny how you start one small project and it just completely snowballs. My five-minute fix turned into a whole afternoon affair, and honestly, I probably made things worse. Ended up just taking it to the shop. Some things are just better left to the pros, I guess.”

2. Pure AI Text (GPT-4)

- AI Text: “The implementation of quantum computing is expected to revolutionize numerous industries by providing computational power that vastly exceeds the capabilities of classical computers. Its primary advantage lies in its ability to solve complex optimization problems, factor large integers, and simulate molecular structures with unprecedented accuracy. Key sectors poised for transformation include cryptography, materials science, and pharmaceutical research. However, significant challenges remain, such as maintaining quantum coherence and reducing error rates, which are critical for the development of scalable, fault-tolerant quantum systems.”

3. AI Text, Human-Edited

- Human Edited AI Text: “Quantum computing could totally change the game for a bunch of industries. Think about it—it offers processing power that just blows regular computers out of the water. I was reading that its main strength is solving really complex problems, like figuring out drug interactions or creating new materials. But it’s not all smooth sailing. The biggest hurdles right now are keeping the quantum states stable and cutting down on errors. It seems like we’re still a ways off from having a quantum computer on every desk, but the potential is just massive.”

4. Human Text by a Non-Native Speaker

- “For my work, it is requirement to make many analysis of the customer datas. Yesterday, I spended five hours for preparing one report. The software has had many difficult options, and I did a mistake with the filter, so the result was not correct at first. I must to be more careful for the next time. My boss say the report is good in the end, but the process is needing much improvements for efficiency.”

5. AI-Humanized Text

- “It is anticipated that the arrival of quantum computation will fundamentally reshape multiple sectors by delivering processing capabilities far beyond those of traditional computing machines. Its chief benefit is found in its capacity for resolving intricate optimization challenges, factoring sizable integers, and modeling molecular formations with exceptional precision. Nevertheless, substantial obstacles persist, including the preservation of quantum coherence and the mitigation of error frequencies, both essential for creating scalable and reliable quantum frameworks.”

Here are the results from ZeroGPT, one of the most popular AI detectors and one that is frequently cited as a top choice.

Pure Human Text

LLM Analysis: ZeroGPT flagged this text with a 28.94% AI score, demonstrating a notable risk of false positives on genuine human writing.

Pure AI Text (GPT-4)

LLM Analysis: The detector performed with perfect accuracy, identifying the unedited AI-generated content with a 100% AI score.

AI Text, Human-Edited

LLM Analysis: A score of 39.18% shows that basic human editing can cause the AI detection rate to plummet, exposing a significant tool vulnerability.

Human Text by a Non-Native Speaker

LLM Analysis: The tool correctly identified this text as 0% AI. This indicates it can differentiate non-standard grammar from AI patterns in this instance.

AI-Humanized Text

LLM Analysis: With a 47.01% AI score, text altered by a paraphraser largely evades detection, suggesting that automated “humanizing” is highly effective.

At a glance:

| Text Sample | ZeroGPT Result |
|---|---|
| Pure Human Text | 28.94% AI Text |
| Pure AI Text (GPT-4) | 100% AI Text |
| AI Text, Human-Edited | 39.18% AI Text |
| Human Text by a Non-Native Speaker | 0% AI Text |
| AI-Humanized Text | 47.01% AI Text |

Note: The percentage indicates how much of the text ZeroGPT judged to be written by AI.

So, what do these test results really tell us about ZeroGPT’s accuracy? Let’s break it down in simple terms.

The most straightforward test was the pure, untouched AI text. As expected, ZeroGPT nailed it, flagging it as 100% AI.

This shows that if someone just copies and pastes directly from a tool like GPT-4, ZeroGPT will probably catch it. It’s very good at spotting the obvious.

However, things get much more complicated from there.

The most worrying result is for the text written by a human. ZeroGPT flagged it as nearly 29% AI. This is a major red flag.

It means that if you write something completely on your own, there’s still a chance the tool could incorrectly label your work as partially AI-generated, potentially causing a lot of unfair trouble.

Now, look at what happened when the AI text was edited by a person or run through an “AI humanizer” tool. The detection rate dropped dramatically to below 50%.

In the real world, a score like 39% or 47% would likely be considered “human” or at least not AI enough to raise an alarm.

This shows just how easy it is to fool the detector with some basic editing or by using a paraphrasing tool. It can’t seem to handle text that’s a mix of human and AI input.
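To make the threshold reasoning concrete, here is a minimal Python sketch that applies a cut-off to the five scores from our test. ZeroGPT does not publish a decision threshold, so the 50% value (`AI_THRESHOLD`) and the `verdict` helper are hypothetical assumptions for illustration, not how ZeroGPT itself labels text.

```python
# Scores from our test: percentage of each sample that ZeroGPT labelled as AI.
ZEROGPT_SCORES = {
    "Pure Human Text": 28.94,
    "Pure AI Text (GPT-4)": 100.0,
    "AI Text, Human-Edited": 39.18,
    "Human Text by a Non-Native Speaker": 0.0,
    "AI-Humanized Text": 47.01,
}

# Hypothetical cut-off: ZeroGPT publishes no threshold, so 50% is an assumption.
AI_THRESHOLD = 50.0


def verdict(score: float, threshold: float = AI_THRESHOLD) -> str:
    """Label a sample 'AI' if its score reaches the threshold, else 'human'."""
    return "AI" if score >= threshold else "human"


for sample, score in ZEROGPT_SCORES.items():
    print(f"{sample}: {score:.2f}% -> {verdict(score)}")
```

Under this assumed cut-off, only the raw GPT-4 sample is labelled AI; both the edited and the humanized samples pass as human. Lowering the cut-off to catch them has a cost: `verdict(28.94, threshold=25.0)` returns `"AI"`, so the genuinely human text would then be flagged as well.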

Interestingly, the one surprise was how it handled the text from a non-native English speaker.

It correctly identified it as 100% human-written with a 0% AI score.

This is a good sign, as it suggests the tool isn’t simply punishing writing that has slightly unusual grammar or sentence structure.

In a nutshell, these results show that ZeroGPT is effective in the ideal, lab-like scenario of pure AI text.

But in the messy real world—where people edit, paraphrase, or just have their own unique writing style—it becomes unreliable.

It can be easily tricked by edited content and, more alarmingly, it can falsely accuse actual human writers.

This suggests that relying on it as a final judge of a text’s origin is a very risky bet.

The Other Side of the Story: A Mountain of Evidence Against Accuracy

While the 98% accuracy figure is certainly an impressive headline, it starts to fall apart under real-world scrutiny.

Independent tests and academic studies tell a much different, more complicated story.

Instead of near-perfection, these analyses show ZeroGPT’s actual accuracy is often somewhere between a much less impressive 35% and 65%.

The biggest problem that keeps cropping up is the issue of “false positives.”

In simple terms, this is when the tool incorrectly accuses a human writer of using AI. This isn’t just a small glitch; it’s a significant flaw with serious consequences.

One academic study found that ZeroGPT wrongly identified a staggering 83% of human-written abstracts as being AI-generated.

Another study found that 62% of human-written papers were incorrectly flagged.

It’s no wonder that online forums like Reddit are filled with posts from frustrated students and writers who have had their original work flagged by the tool, forcing them to prove their own authenticity.

On the flip side, ZeroGPT struggles mightily with content that started as AI-generated but was later edited by a person.

While it’s pretty good at catching raw, untouched AI text—as our own test showed when it scored a pure GPT-4 sample at 100%—its effectiveness plummets as soon as a human steps in.

In our tests, for example, a simple human edit of an AI-generated paragraph caused the detection score to drop to just 39%.

Another test using an “AI-humanizer” tool, which just paraphrases the text, got the score down to 47%.

In a real-world scenario, scores this low would almost certainly fly under the radar.

How Can It Be So Wrong? A Look Under the Hood

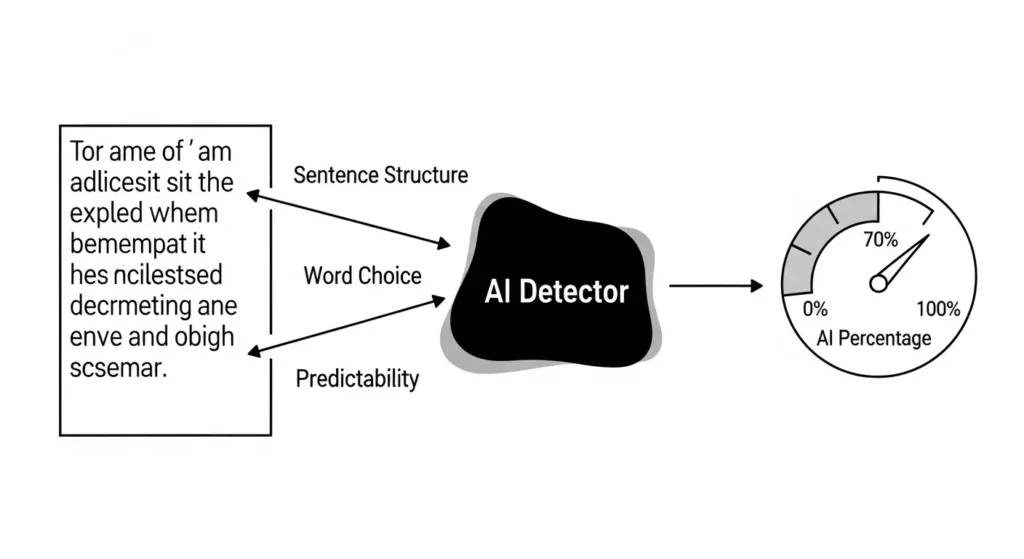

So why does this happen? To understand its failures, you have to know a little about how it works. AI detectors like ZeroGPT are trained to look for patterns.

Think of it this way: AI writing is often very uniform, with predictable sentence structures and a consistent, slightly robotic flow.

The detector analyzes text for these tell-tale signs, looking at things like variation in sentence length (“burstiness”) and how predictable the word choices are to a language model (“perplexity”).

If a text is too perfect and predictable, the alarm bells go off.
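The burstiness idea can be illustrated with a short Python sketch that measures how much sentence length varies, computed here as the coefficient of variation (standard deviation divided by mean) of words per sentence. This metric and the crude regex sentence splitter are assumptions chosen for illustration; ZeroGPT’s actual DeepAnalyse™ method is not public. Perplexity is left out because it requires scoring the text with a language model.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    # Crude split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    # Count words per sentence by splitting on whitespace.
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 = perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Made-up example passages for illustration only.
uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat on the perch."
varied = "Disaster. I thought it was just a simple flat tire, you know? Ended up taking it to the shop."

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

The uniform passage (three six-word sentences) scores 0.0, while the varied one scores roughly 0.65. A detector leaning on a signal like this would find the first passage more “machine-like,” even though a person could easily write it.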

The problem is, good human writing can sometimes look like this too, especially in formal or academic contexts.

If you write a well-structured, polished essay with clear, concise sentences, you might accidentally mimic the very patterns the detector is trained to flag.

This is why so many innocent writers get caught in the crossfire.

What makes this even more frustrating for users is the “black box” nature of the tool. When ZeroGPT flags your text, it gives you a percentage but no explanation.

It doesn’t highlight why it thinks your work is AI-generated, which leaves you with no way to understand the result or challenge it.

It’s like being told you’re guilty without ever being shown the evidence.

ZeroGPT in a Crowded Market: How Does It Compare?

When you place ZeroGPT alongside its competitors like GPTZero, Originality.ai, or Copyleaks, a clear picture emerges.

To be fair, ZeroGPT has its strengths. Its interface is clean and easy to use, and it is genuinely effective at one specific thing: identifying raw, unedited AI text that has been copied and pasted without changes.

However, its weaknesses are significant. Its high false positive rate makes it a risky choice for any high-stakes situation, like a teacher checking a student’s paper.

Other tools, while not perfect, often have better track records in this area, showing fewer false accusations.

This is why you’ll see such a mix of reviews online; tests that only use pure AI text might rank ZeroGPT highly, while those that focus on false positives and edited text place it much lower.
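The effect of test design on the headline number can be shown with a small Python sketch that scores the five samples from our test in two ways. It assumes a hypothetical 50% cut-off and labels both edited samples as AI in origin, which is a judgment call. Five samples are far too few for a real accuracy estimate, so treat the output as an illustration of how test composition moves the figure, not as a measurement.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    name: str
    is_ai: bool   # assumed ground truth: did the text originate from an AI model?
    score: float  # ZeroGPT's reported AI percentage from our test


SAMPLES = [
    Sample("Pure Human Text", False, 28.94),
    Sample("Pure AI Text (GPT-4)", True, 100.0),
    Sample("AI Text, Human-Edited", True, 39.18),
    Sample("Human Text by a Non-Native Speaker", False, 0.0),
    Sample("AI-Humanized Text", True, 47.01),
]


def accuracy(samples: list[Sample], threshold: float = 50.0) -> float:
    """Share of samples where 'score >= threshold' matches the ground truth."""
    correct = sum((s.score >= threshold) == s.is_ai for s in samples)
    return correct / len(samples)


def false_positive_rate(samples: list[Sample], threshold: float = 50.0) -> float:
    """Share of human-written samples wrongly flagged as AI."""
    humans = [s for s in samples if not s.is_ai]
    if not humans:
        return 0.0
    flagged = sum(s.score >= threshold for s in humans)
    return flagged / len(humans)


pure_ai_only = [s for s in SAMPLES if s.name == "Pure AI Text (GPT-4)"]
print(f"Pure-AI-only test: {accuracy(pure_ai_only):.0%}")
print(f"Mixed test:        {accuracy(SAMPLES):.0%}")
```

A test that only uses pure AI text reports 100% accuracy, while the mixed set drops to 60%. The false-positive picture also depends on the cut-off: `false_positive_rate(SAMPLES)` is 0.0 at 50% but 0.5 at 25%, because the human sample scored 28.94%.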

Bottom Line: 98% Accurate or 98% Speculation?

So, let’s circle back to the original question.

After looking at the evidence, the 98% accuracy claim feels less like a fact and more like a marketing slogan.

The tool’s consistent struggles with false positives and its inability to reliably detect edited or paraphrased content show that its real-world performance doesn’t come close to that number.

The claim is far more speculation than reality.

For anyone thinking of using this tool, the advice is simple: proceed with extreme caution.

You should absolutely not use ZeroGPT as the final, single source of truth to judge whether a piece of writing is authentic, especially when a person’s academic or professional integrity is on the line.

Think of it as a preliminary screening tool at best—a first step in a much larger process.

If it flags something, your next step shouldn’t be an accusation, but rather a more careful, human-led review.

The best approach is to combine multiple tools and, most importantly, apply your own critical thinking and judgment.

- Never rely on a single tool.

- Use AI detectors as a preliminary check, not a final verdict.

- Prioritize human judgment and critical thinking.

- If a text is flagged, initiate a conversation, not an accusation.

The hunt for a truly foolproof AI detector continues, but for now, ZeroGPT isn’t it.