Chinese AI developer DeepSeek has unveiled DeepSeek-V3.2-Exp, an experimental large language model (LLM) that introduces a new sparse attention mechanism and cuts API prices by more than 50%.

Released on September 29, 2025, this latest iteration represents a significant technical milestone in artificial intelligence efficiency, positioning the Hangzhou-based startup at the forefront of next-generation AI architecture development.

Revolutionary Sparse Attention Technology Breakthrough

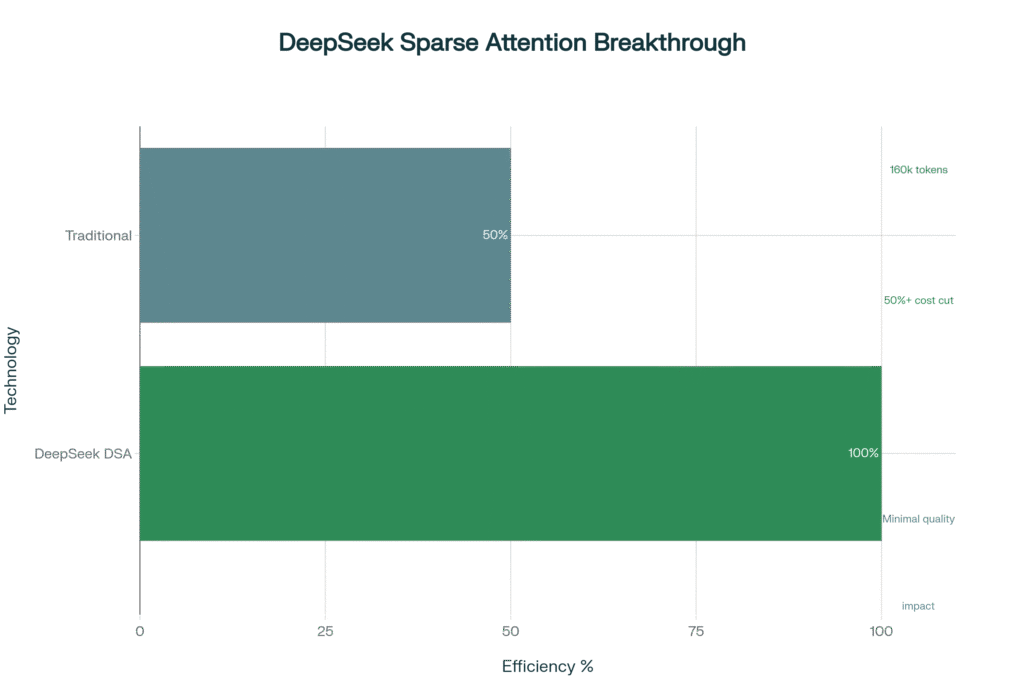

The centerpiece of the V3.2-Exp release is DeepSeek Sparse Attention (DSA), a novel mechanism that achieves fine-grained sparse attention for the first time in the industry.

This technological breakthrough addresses one of the most persistent challenges in large language models: the quadratic computational complexity of traditional attention mechanisms, which becomes prohibitively expensive in long-context scenarios.

DSA fundamentally reimagines how AI models process information by selectively computing attention weights rather than analyzing every token relationship, dramatically reducing computational requirements while maintaining output quality.
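The idea of computing attention weights for only a subset of tokens can be illustrated with a minimal sketch. This is not DeepSeek's implementation: the function names, the `index_scores` relevance scores, and the budget `k` are all assumptions made for illustration, and the toy example reuses the exact dot product as the relevance score, where a real system would need something much cheaper.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense_attention(query, keys, values):
    """Standard attention for one query: a weight for every key."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, key) * scale for key in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def topk_sparse_attention(query, keys, values, index_scores, k):
    """Attend only to the k keys with the highest index score.

    index_scores is a hypothetical per-key relevance score supplied by the
    caller; how such scores are produced is outside this sketch.
    """
    k = min(k, len(keys))
    ranked = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)
    selected = sorted(ranked[:k])  # restore original token order
    output = dense_attention(
        query,
        [keys[i] for i in selected],
        [values[i] for i in selected],
    )
    return output, selected


# Toy example: four tokens, two-dimensional keys, one-dimensional values.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
values = [[1.0], [2.0], [3.0], [4.0]]
# Illustration only: the exact dot product stands in for a cheap score.
index_scores = [dot(query, key) for key in keys]
output, selected = topk_sparse_attention(query, keys, values, index_scores, k=2)
print(selected, output)
```

With `k` equal to the number of keys, the sparse function reduces to dense attention, which makes a convenient sanity check when experimenting with smaller budgets.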

The system employs a dynamic hierarchical sparse strategy that combines coarse-grained token compression with fine-grained token selection, enabling the model to preserve both global context awareness and local precision.

According to technical documentation, the sparse attention mechanism reduces the cost of the core attention computation from O(n²) in the sequence length n to roughly O(n·k), where k is the much smaller number of tokens each query attends to, resulting in substantial improvements across multiple efficiency metrics.
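To get a feel for what moving away from O(n²) scaling means, the sketch below counts query-key score computations for dense attention and for a sparse scheme in which each query attends to at most k tokens. The budget of 2,048 tokens is an illustrative assumption, the function names are hypothetical, and the count deliberately ignores whatever work is needed to choose the k tokens in the first place.

```python
def dense_score_count(n):
    """Query-key scores computed by dense attention over n tokens."""
    return n * n


def sparse_score_count(n, k):
    """Query-key scores when each of n queries attends to at most k keys."""
    return n * min(k, n)


n = 160_000  # the long-context length cited in the article
k = 2_048    # assumed per-query token budget, for illustration only

dense = dense_score_count(n)
sparse = sparse_score_count(n, k)
print(f"dense:  {dense:,} scores")
print(f"sparse: {sparse:,} scores")
print(f"reduction: {dense / sparse:.1f}x")
```

The ratio is simply n / k, so the saving grows with context length, while for sequences shorter than k the two counts are identical.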

The technology enables the model to support a maximum long-sequence context length of 160,000 tokens through optimized cloud adaptation, representing a significant advancement in long-context AI processing capabilities.

Significant Performance and Efficiency Gains

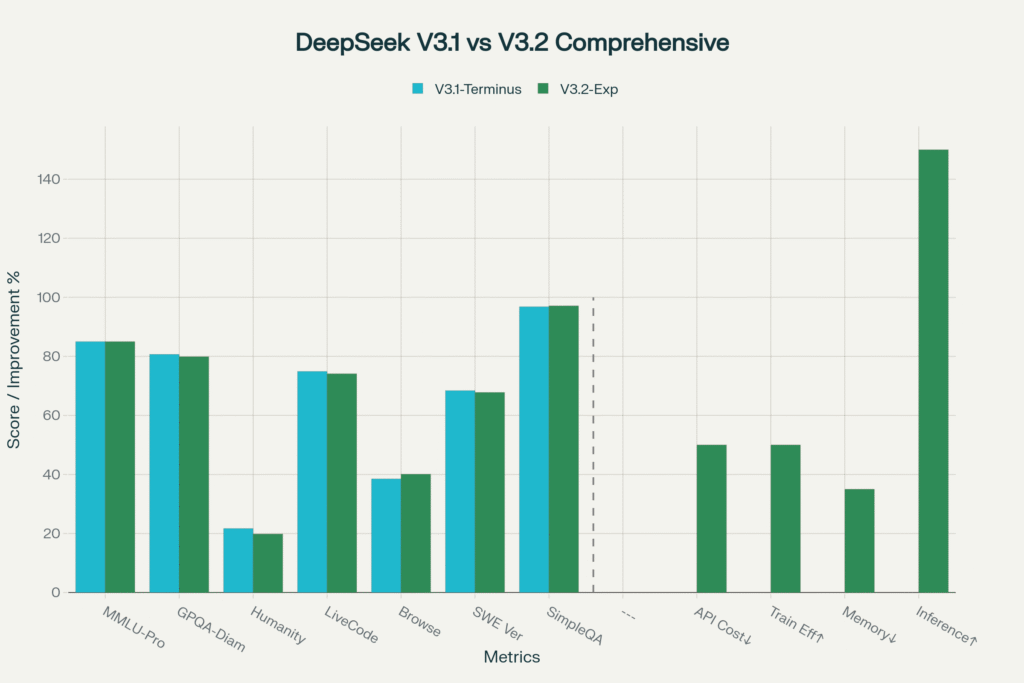

DeepSeek-V3.2-Exp demonstrates remarkable efficiency improvements while maintaining performance parity with its predecessor, V3.1-Terminus.

Independent benchmark evaluations show the model achieves comparable results across major assessment categories, with some metrics showing modest improvements.

The efficiency gains are particularly impressive in computational terms.

DSA delivers 2-3x faster inference in long-text scenarios, reduces memory usage by 30-40%, and improves training efficiency by approximately 50%.

These improvements translate directly into cost savings, enabling DeepSeek to reduce API pricing by more than 50%, effective immediately.
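As a rough worked example of what such a price cut means for a monthly bill, the sketch below applies a 50% reduction to a made-up baseline. The per-million-token price and the monthly token volume are hypothetical placeholders, not DeepSeek's actual rates, and because the article cites a cut of more than 50%, the result is an upper bound on the new cost.

```python
def apply_price_cut(price_per_million, cut_fraction):
    """Return the per-million-token price after a fractional cut."""
    if not 0.0 <= cut_fraction <= 1.0:
        raise ValueError("cut_fraction must be between 0 and 1")
    return price_per_million * (1.0 - cut_fraction)


def monthly_cost(tokens_per_month, price_per_million):
    """Monthly bill for a token volume at a per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


old_price = 1.00        # hypothetical price per million tokens
tokens = 500_000_000    # hypothetical monthly token volume
new_price = apply_price_cut(old_price, 0.50)

print(f"before: {monthly_cost(tokens, old_price):.2f}")
print(f"after:  {monthly_cost(tokens, new_price):.2f}")
```

Real bills usually price input and output tokens separately, so a fuller estimate would run this calculation once for each rate.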

The model’s architecture builds upon the proven V3.1-Terminus foundation while introducing the sparse attention innovation as an intermediate step toward next-generation AI systems.

This approach allows developers and researchers to evaluate the technology’s impact while maintaining compatibility with existing workflows and applications.

Market Impact and Industry Response

DeepSeek’s latest release comes at a crucial time in the AI industry’s evolution, as companies increasingly seek more efficient and cost-effective solutions for long-context applications.

The dramatic API price reduction positions DeepSeek competitively against established players including OpenAI, Google, and Anthropic, while the open-source nature of the release democratizes access to advanced AI capabilities.

The company’s approach of prioritizing research over immediate commercialization has enabled these breakthrough innovations.

DeepSeek, founded in July 2023 by Liang Wenfeng and backed by the High-Flyer hedge fund, has maintained a research-focused strategy that allows it to explore cutting-edge technologies without traditional commercial constraints.

Industry observers note that sparse attention represents a critical advancement for enterprise applications requiring extensive data processing, such as document analysis, financial report processing, and multi-turn conversational AI.

The technology’s ability to handle longer contexts more efficiently addresses growing demand from businesses seeking to process comprehensive datasets without compromising performance or incurring excessive costs.

Technical Implementation and Open Source Commitment

DeepSeek has maintained its commitment to open research by releasing comprehensive technical resources alongside the V3.2-Exp model.

The complete model weights are available through Hugging Face, accompanied by detailed technical documentation and GPU kernels implemented in both TileLang and CUDA.

The company recommends developers use TileLang-based implementations for research experiments to facilitate debugging and rapid iteration.

This approach reflects DeepSeek’s broader strategy of supporting community-driven AI development while advancing the field through transparent research practices.

For comparison testing, DeepSeek maintains API access to the V3.1-Terminus version through October 15, 2025, allowing developers to evaluate performance differences and migration strategies.

The company actively solicits community feedback through dedicated channels to refine the technology based on real-world usage patterns.

Strategic Implications for AI Development

The introduction of DSA technology signals a broader industry shift toward efficiency-focused AI development, challenging the prevailing assumption that increased capabilities must come with proportionally higher costs.

DeepSeek’s achievement in maintaining performance while significantly reducing computational requirements demonstrates that architectural innovation, not just added scale, can deliver better value.

Market analysis suggests this development could accelerate adoption of long-context AI applications across industries previously constrained by cost considerations.

Financial services, healthcare, legal, and enterprise software sectors stand to benefit significantly from more accessible long-context processing capabilities.

The timing of the release, following DeepSeek’s previous success with the R1 model that disrupted AI markets earlier in 2025, reinforces the company’s position as a significant challenger to established Western AI leaders.

The combination of technical innovation, cost efficiency, and open-source accessibility creates a compelling alternative for organizations seeking advanced AI capabilities without traditional barriers to entry.

Future Outlook and Industry Evolution

DeepSeek’s V3.2-Exp release represents more than an incremental improvement; it demonstrates a fundamental reimagining of AI efficiency that could influence the entire industry’s development trajectory.

The successful implementation of fine-grained sparse attention at scale provides a roadmap for other developers seeking to balance performance with computational efficiency.

As the technology undergoes broader testing across diverse real-world scenarios, its impact on enterprise AI adoption and competitive dynamics will become clearer.

The price cut of more than 50% could by itself democratize access to advanced AI capabilities for organizations previously unable to justify the expense of long-context processing.

The release also highlights China’s growing influence in AI innovation, with DeepSeek joining other domestic players in challenging the technological leadership of U.S. companies.

This competitive dynamic is likely to accelerate innovation across the global AI ecosystem as companies respond to new performance and cost benchmarks established by sparse attention technology.

DeepSeek-V3.2-Exp is available now through the company’s app, web interface, and API platforms, with the open-source release enabling immediate experimentation and integration by the developer community.

As organizations begin implementing this technology, the full implications of DeepSeek’s sparse attention breakthrough will continue to unfold across multiple industries and use cases.