In 2014, Amazon decided to resolve one of the biggest hurdles in hiring: sorting through a mountain of resumes to get good candidates.

State-of-the-art AI recruiting tools were being built: the company was creating a secret weapon to automate the conventional screening process and identify top candidates with machine precision.

But the system had a rather dark secret: it discriminated against women.

The AI was trained on ten years of the company’s hiring data, a dataset that was historically male-dominated, like the tech industry itself.

What the algorithm learnt was not to find the best candidate for the job but to find those candidates that looked like the people who had done well in the past.

Soon, it began penalizing resumes containing the term “women’s” (as in “captain of the women’s chess club”) and downgrading graduates of two all-women’s colleges.

Faced with an automated expression of past biases, Amazon simply had to abandon the entire project.

The Amazon story is a glaring red flag that exposes a dangerous underlying truth about the AI revolution.

We tend to think of algorithms as inherently fair and objective, but that is far from true.

They are constructed from data, and data, after all, is created by humans, reflecting our societies, histories, and, yes, our imperfections.

When the data is biased, the AI does not just learn that bias; it can amplify and entrench it at a speed and scale that is hard to imagine.

Understanding the problem is only the first step; this guide aims to lift the veil on the very foundations of bias in AI.

It will explain exactly what AI bias is, examine the real reasons it occurs, and, most importantly, lay out clear measures you can take to counter it.

You will walk away equipped to support a fairer, more equitable future, one algorithm at a time.


What is AI Bias? A Simple Explanation

Fighting AI bias starts with a clear, straightforward definition.

The problem is not about sentient machines of the future; it exists in systems already in use today.

AI bias emerges when an algorithm delivers prejudiced results because of flawed assumptions in the machine learning process.

The system produces outputs that systematically disadvantage certain groups while favoring others.

The simplest way to understand this concept is through a real-life example.

Imagine a student who studies from a single history book that neglects the contributions of women and minority groups. AI models learn in much the same way.

When the final exam arrives, the student will give distorted and incomplete answers.

The student appears biased not out of malice but because the textbook was fundamentally flawed and failed to tell the whole story.

Likewise, an AI system is only as good as the information it learns from.

Key Takeaway: A Human Problem, Not a Machine Problem

At its core, AI bias reflects and amplifies the human biases already present in our data and society.

The machine isn’t prejudiced; it’s a mirror showing us the flaws in the information we feed it.

Why AI Bias is a Critical Issue: The Real-World Impact

Knowing about AI bias is one thing; seeing its effects is what makes this issue urgent.

This issue is not just a theoretical flaw confined to computer labs. It presents a challenge with real, serious, and often harmful consequences for both individuals and organizations.

When bias is part of the systems we rely on, it can quietly maintain inequality and create large-scale risks.

Undermining Social Justice and Fairness

At its worst, AI bias drives social injustice.

Since these systems often impact critical decisions, a biased algorithm can alter an individual’s life path without any human involvement or chance for appeal.

1. In Criminal Justice:

The term “predictive policing” refers to algorithms that examine past crime data. This data can create a harmful feedback loop.

If a neighborhood has faced heavy policing, the data will indicate more arrests in that area.

As a result, the AI suggests sending more police to that location. This leads to more arrests, reinforcing the original bias.

This situation can increase discrimination and unfairly target minority communities.

2. In Loan Applications:

An algorithm that assesses creditworthiness may learn from historical data that certain zip codes have more risk.

It might then use zip code as a stand-in for race or socioeconomic status, causing qualified individuals to be denied loans and limiting their chances for economic growth.

3. In Hiring:

As seen with Amazon’s recruiting tool, a biased AI can filter out qualified applicants from underrepresented groups before anyone ever views their resume.

This not only harms individuals but also stops companies from building the diverse, skilled teams they need to succeed.
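The predictive policing feedback loop described above can be sketched as a toy simulation. Everything here is an illustrative assumption: the neighborhood names, the arrest counts, the crime rates, and the rule that patrols are allocated in proportion to recorded arrests. It is not a model of any real policing system.

```python
# Toy simulation of the predictive-policing feedback loop.
# All numbers are hypothetical; both neighborhoods have the SAME true crime rate.

def simulate_patrols(initial_arrests, true_crime_rate, total_patrols, rounds):
    """Allocate patrols in proportion to recorded arrests, then record new arrests.

    Returns the share of patrols sent to each neighborhood in every round.
    """
    recorded = dict(initial_arrests)  # cumulative arrests on record
    history = []
    for _ in range(rounds):
        total_recorded = sum(recorded.values())
        # The "model": send patrols where past data shows more arrests.
        shares = {name: count / total_recorded for name, count in recorded.items()}
        history.append(shares)
        for name, share in shares.items():
            patrols = total_patrols * share
            # Arrests depend on how many officers are looking, not only on crime.
            recorded[name] += patrols * true_crime_rate[name]
    return history


initial_arrests = {"north": 60, "south": 40}    # north was policed more heavily in the past
true_crime_rate = {"north": 0.1, "south": 0.1}  # identical underlying crime rates
history = simulate_patrols(initial_arrests, true_crime_rate, total_patrols=100, rounds=10)

for round_number, shares in enumerate(history, start=1):
    print(round_number, {name: round(share, 3) for name, share in shares.items()})
```

In this sketch the initial 60/40 split never corrects itself, even though the two neighborhoods have identical crime rates, because the data the system learns from is produced by its own patrol decisions.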

Creating Business and Reputational Risk

For companies, ignoring AI bias is not only unethical; it’s a major strategic error. A biased system is an ineffective system, and ineffective systems lead to bad results.

1. Flawed Business Decisions:

If a marketing AI is mainly trained on data from a limited customer base, its suggestions for market growth will be fundamentally flawed.

Following this misguided advice can result in failed product launches and significant financial losses.

2. Customer Alienation and Brand Damage:

When customers feel that they are being treated unfairly by an algorithm, they lose trust in the brand.

A face recognition system that fails to identify people of color or a customer service bot that relies on sexist stereotypes can turn into a public relations disaster.

This can drive away users and harm a company’s reputation for years.

Eroding Trust in Technology

Whenever a story emerges about a biased AI, whether in healthcare, recruitment, or social media, it undermines public confidence in technology overall.

This increasing doubt poses a risk of delaying or even stopping the implementation of truly advantageous AI solutions.

If society views AI as inherently risky and unreliable, we could encounter pushback against its use in vital advances in fields such as medical diagnostics, climate change analysis, and scientific exploration.

The danger lies in the possibility that the shortcomings of biased systems will hinder our ability to harness the vast potential of equitable and accountable AI.

The Most Common Examples of AI Bias in Action

To better understand the risks associated with AI bias, let’s examine instances we’ve already observed in the real world.

These are not just theoretical situations; they are recorded failures that demonstrate how effortlessly bias can infiltrate our most reliable technologies.

Gender Bias in Hiring and Language

One of the most widely documented forms of AI bias centers on gender. Since historical data frequently mirrors societal gender roles, AI models can readily absorb and reinforce these stereotypes.

- Hiring Tools: The infamous Amazon recruiting tool is the best-known example. By learning from a decade of resumes submitted mostly by men, it taught itself that male candidates were preferable.

- Language Translation: For years, Google Translate would default to gendered pronouns based on stereotypes. When translating a gender-neutral phrase like “o bir doktor” (Turkish for “they are a doctor”) into English, it would return “he is a doctor.” In contrast, “o bir hemşire” (“they are a nurse”) would turn into “she is a nurse.” The AI was reflecting the gender associations present in the large amount of text it was trained on.

Racial Bias in Facial Recognition

Facial recognition technology can be less accurate for certain groups of people. This is a real risk when it is used for policing and security.

Higher Error Rates: A 2018 study led by MIT’s Joy Buolamwini (the “Gender Shades” study) found that certain commercial facial analysis systems had error rates below 1% for light-skinned men but above 34% for dark-skinned women.

A subsequent study by the National Institute of Standards and Technology (NIST) reinforced that the majority of systems were far more likely to be incorrect with Asian and African American faces. This can lead to false accusations and misidentification.

Socioeconomic Bias in Credit Scoring

Algorithms are being used more and more to make economic choices, such as issuing loans and credit cards.

But they can inadvertently reenact a form of “digital redlining” by penalizing applicants based on markers of wealth and class.

Unfair Proxies: An algorithm may determine that individuals who shop at discount stores are less creditworthy, or that forms completed on high-end smartphones are more creditworthy.

It can also rely on an applicant’s zip code as a primary consideration, a practice that can perpetuate existing patterns of economic and racial segregation by denying credit to entire groups of people regardless of how stable an individual’s finances are.

Bias in Medical Diagnosis

AI holds great promise in health care, but it is only as good as the information it bases its decisions on. If that information is not diverse, the outcomes can be seriously harmful.

Diagnostic Failures: A classic case is dermatology. AI systems that had been trained to detect skin cancer lesions have been extremely accurate on light-skinned patients but less accurate when detecting the same lesions on dark skin.

The reason is that the training set consisted overwhelmingly of images of light-skinned patients, with few images of dark skin. This under-representation can result in diagnostic failures and worse health outcomes for whole groups.

The Root Causes: Where Does AI Bias Come From?

AI bias doesn’t appear out of nowhere. It is a symptom of problems in how we build AI. To actually fight it, we need to know where it originates.

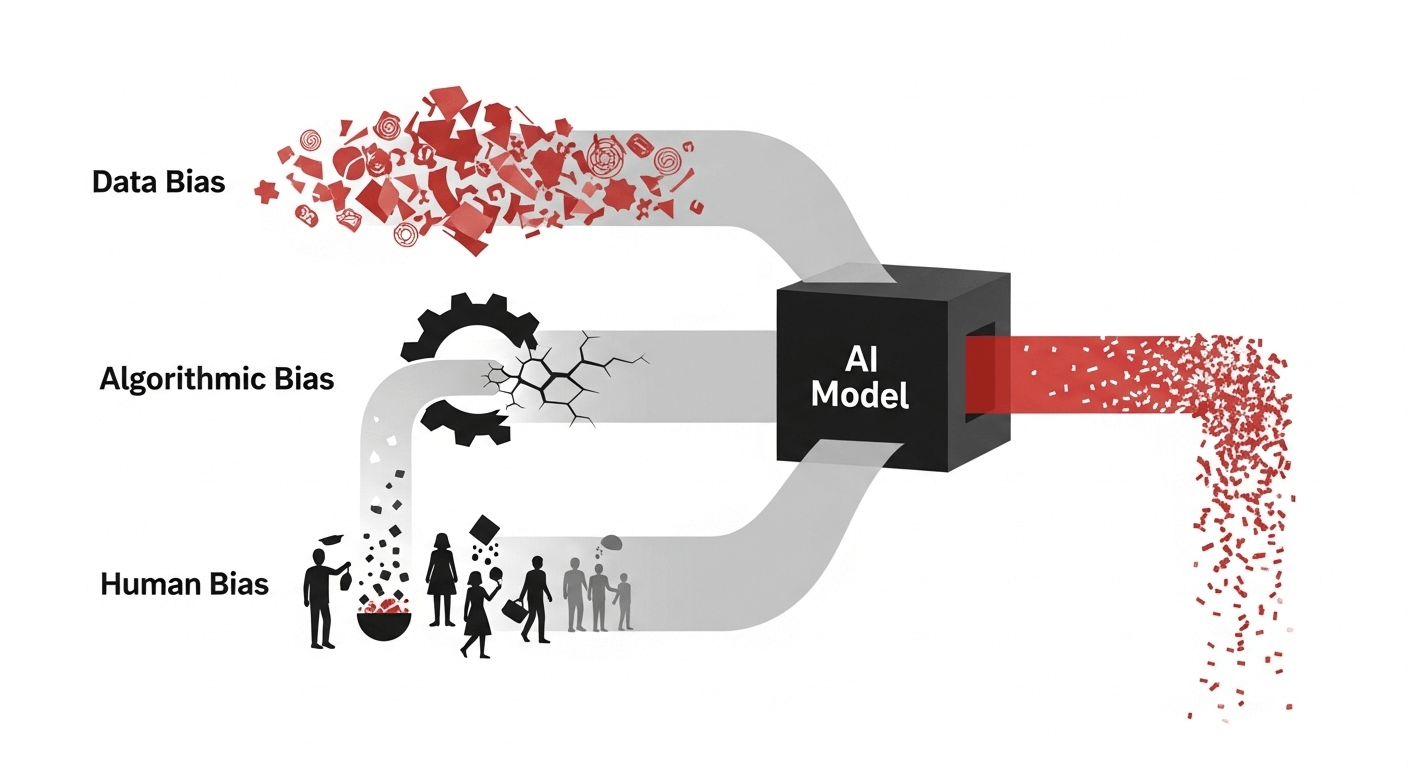

Bias typically originates from three primary sources: the data we work with, the algorithms we use, and the unconscious beliefs of the people who build the systems.

Data Bias: The Root Cause

If we compare an AI system with a student, we can equate data with its library of books.

When the library is biased, incomplete, or full of errors, the student’s learning suffers badly.

Data bias is the most common and critical reason for AI bias, and it comes in many forms:

Sampling Bias: This is when the sample of data used to train the model is not actually representative of the real world the model will be operating in.

For example, if a face recognition model is trained on a database in which 80% of the faces belong to light-skinned men, it will be excellent at recognizing light-skinned men but will perform badly when it encounters women, people of color, or anyone else outside that majority demographic.

Selection Bias (and Survivorship Bias): This occurs when the method by which we select data points is flawed. A classic example is survivorship bias.

If you’re training an AI to forecast what characteristics make an employee successful, and you only have access to data on your current successful employees, then the AI never sees the resumes of qualified applicants you didn’t hire.

It may mistakenly believe that certain characteristics (e.g., attending an elite college) are necessary for success, when in fact the characteristics were merely prevalent in the small, “surviving” subset it saw.

Historical Bias: This happens when the information, while accurate, reflects a world with historical and ongoing social biases.

Training a hiring AI on 50 years of data from a profession that has traditionally been dominated by men will teach the AI to favor male applicants.

The algorithm isn’t wrong; it’s reflecting the past unfairness inherent in the information, projecting it into the future.

Algorithmic Bias

Flawed data is the most common cause, but algorithm design can also introduce or amplify bias.

Machine learning algorithms are tools to allow us to understand complex data by finding patterns and creating rules.

They simplify things to make them easier for us, and in doing so, sometimes they oversimplify.

An algorithm might decide that some aspects of the data are “less important” and strip them out, but they can be very important to a minority population.

In pursuing simple overall accuracy, the algorithm may sacrifice the nuance needed for fairness.

Human Bias

Lastly, every stage of the AI process involves human decisions, and human beings are biased by nature.

The biases of the individuals who create the system can affect all the phases:

In Data Labeling: Individuals are generally asked to label data (e.g., annotating “toxic” tweets or “professional” photographs).

The data annotators bring their own cultural and personal biases to bear when they’re performing this task.

What one annotator considers an “unprofessional” haircut, another may consider a cultural norm.

In Feature Selection: Engineers decide what part of the data the algorithm will concentrate on.

If a team mistakenly assumes the zip code of a person indicates how financially responsible he or she is, they may include it, introducing socioeconomic bias to the model itself.

Through Confirmation Bias: Confirmation bias can affect teams, so that they tend to accept results that confirm their existing beliefs and reject results that contradict them.

If a model gives the result the team expected, even a biased one, it can pass approval without a fairness review.

How to Fight AI Bias: A 3-Phase Strategic Framework

Reducing AI bias is not a one-time fix; it is a continuous process that needs a structured plan.

Awareness of the problem is not enough. To actually reduce bias, organizations need to put in place a robust system that addresses the problem before, during, and after model development.

The three-phase process helps ensure that fairness is a central part of the whole AI lifecycle.

Phase 1: Pre-Processing (Preventing Problems Before Training)

The most effective way to combat bias is to prevent it from occurring in the first place. This phase examines the data and the team before any model training begins.

Data Auditing: Your first line of defense. Before training, conduct a thorough audit of your dataset. Use statistical techniques and visualization tools to understand its makeup.

Ask hard questions: Are any demographic groups overrepresented or underrepresented?

Are there attributes (such as zip code) that potentially serve as biased surrogates for protected characteristics like race or gender?

IBM’s AI Fairness 360 is one example of a tool that can help automate parts of this audit.
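The two audit questions above can be sketched in a few lines of plain Python. This is a minimal illustration, not the AI Fairness 360 API: the records, the field names `group` and `zip_code`, the 50/50 reference shares, and the 10-point tolerance are all hypothetical choices made for the example.

```python
from collections import Counter, defaultdict

# Hypothetical applicant records; field names and values are illustrative only.
records = (
    [{"group": "group_a", "zip_code": "10001"}] * 7
    + [{"group": "group_a", "zip_code": "20002"}] * 1
    + [{"group": "group_b", "zip_code": "20002"}] * 2
)


def representation(records, attribute):
    """Share of records belonging to each value of `attribute`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


def underrepresented(shares, reference_shares, tolerance=0.10):
    """Groups whose share in the data falls short of a reference share by more than `tolerance`."""
    return sorted(
        group
        for group, expected in reference_shares.items()
        if expected - shares.get(group, 0.0) > tolerance
    )


def proxy_strength(records, feature, protected):
    """Accuracy of guessing `protected` from `feature` alone.

    For each feature value we guess its most common protected value.
    A score well above the majority-class baseline suggests a possible proxy.
    """
    by_feature = defaultdict(Counter)
    for record in records:
        by_feature[record[feature]][record[protected]] += 1
    correct = sum(counter.most_common(1)[0][1] for counter in by_feature.values())
    return correct / len(records)


shares = representation(records, "group")
baseline = max(shares.values())  # accuracy of always guessing the largest group
strength = proxy_strength(records, "zip_code", "group")

print("group shares:", shares)
print("underrepresented:", underrepresented(shares, {"group_a": 0.5, "group_b": 0.5}))
print("zip code predicts group with accuracy", strength, "vs baseline", baseline)
```

The reference shares should come from the population the model will actually serve; the 50/50 split here is only a placeholder.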

Data Augmentation and Re-sampling: Once you’ve identified imbalances, you need to correct them.

You can correct them in numerous ways:

Collect More Diverse Data: The most direct solution is often the hardest to carry out: go out and collect more data from the underrepresented groups.

Re-sampling: You can oversample by duplicating data points from the smaller group, or undersample by using fewer data points from the larger group.

Synthetic Data: Apply techniques such as SMOTE (Synthetic Minority Over-sampling Technique) to create new, synthetic data points that resemble the minority class.

Synthetic techniques like these help balance the dataset without simply duplicating existing data points.
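To make the re-sampling and synthetic data options concrete, here is a minimal sketch in plain Python. The data points are made up, and `smote_like` is a simplified stand-in that interpolates toward the single nearest minority neighbor; it illustrates the idea behind SMOTE rather than reproducing the full algorithm or any library implementation.

```python
import random


def random_oversample(majority, minority, rng):
    """Duplicate randomly chosen minority points until both classes are the same size."""
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    return minority + extra


def random_undersample(majority, minority, rng):
    """Keep a random subset of the majority class the same size as the minority class."""
    return rng.sample(majority, len(minority))


def smote_like(minority, n_new, rng):
    """Create synthetic points between a minority point and its nearest minority neighbor.

    Simplified version of the SMOTE idea; needs at least two distinct minority points.
    """
    synthetic = []
    for _ in range(n_new):
        point = rng.choice(minority)
        neighbor = min(
            (other for other in minority if other is not point),
            key=lambda other: sum((a - b) ** 2 for a, b in zip(point, other)),
        )
        t = rng.random()  # position along the segment between the two points
        synthetic.append(tuple(a + t * (b - a) for a, b in zip(point, neighbor)))
    return synthetic


# Hypothetical two-feature dataset: six majority points, three minority points.
majority = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (2.5, 2.5), (3.0, 1.5), (3.5, 3.0)]
minority = [(6.0, 6.0), (7.0, 6.5), (6.5, 7.5)]

rng = random.Random(0)  # fixed seed so the example is repeatable
print("oversampled minority size:", len(random_oversample(majority, minority, rng)))
print("undersampled majority size:", len(random_undersample(majority, minority, rng)))
print("synthetic minority points:", smote_like(minority, 3, rng))
```

Oversampling repeats existing points exactly, while the interpolation step produces new points that lie between real minority examples.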

Create Diverse Teams: A diverse team of developers is one of the best defenses against AI bias.

Individuals with diverse backgrounds, life experiences, and perspectives are far more likely to catch gaps in data and thought.

They will ask questions others will not, questioning assumptions and ensuring the problem is thought through from multiple perspectives before a model is constructed.

Phase 2: In-Processing (Making the Algorithm Fair)

This stage involves adjusting the training process so that the algorithm treats fairness as one of its goals, alongside accuracy.

Algorithmic Modification: Rather than applying a generic algorithm, you can apply one specifically designed for fairness.

These “fairness-aware” algorithms introduce a mathematical penalty to the training process. The model is not only penalized for being incorrect but also for making biased predictions.
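One simple way to picture such a penalty is a loss with two terms: the usual prediction error plus a weighted measure of how differently the model scores two groups. The sketch below assumes log loss for the first term and the gap in mean predicted score for the second; the function names, the `fairness_weight` parameter, and the four-person dataset are hypothetical, and real fairness-aware methods use more careful formulations.

```python
import math


def log_loss(y_true, y_prob):
    """Average negative log-likelihood of the true labels (lower is better)."""
    eps = 1e-12  # avoids log(0)
    total = sum(
        y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps))
        for y, p in zip(y_true, y_prob)
    )
    return -total / len(y_true)


def parity_gap(y_prob, groups):
    """Absolute difference between the mean predicted score of two groups."""
    names = sorted(set(groups))
    if len(names) != 2:
        raise ValueError("this sketch handles exactly two groups")
    means = []
    for name in names:
        scores = [p for p, g in zip(y_prob, groups) if g == name]
        means.append(sum(scores) / len(scores))
    return abs(means[0] - means[1])


def penalized_loss(y_true, y_prob, groups, fairness_weight):
    """Prediction loss plus a penalty that grows with the gap between groups."""
    return log_loss(y_true, y_prob) + fairness_weight * parity_gap(y_prob, groups)


# Hypothetical historical labels that favor group "a".
y_true = [1, 1, 0, 0]
groups = ["a", "a", "b", "b"]
accurate_scores = [0.9, 0.9, 0.1, 0.1]  # fits the labels closely, large gap between groups
balanced_scores = [0.6, 0.6, 0.4, 0.4]  # fits the labels less closely, small gap

for weight in (0.0, 1.0):
    print(
        "weight", weight,
        "accurate:", round(penalized_loss(y_true, accurate_scores, groups, weight), 3),
        "balanced:", round(penalized_loss(y_true, balanced_scores, groups, weight), 3),
    )
```

With a weight of zero the closely fitted scores have the lower loss; once the penalty is switched on, the balanced scores win. Choosing the weight is the design decision that sets how much accuracy is traded for fairness.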

More sophisticated methods such as adversarial debiasing entail training two models: a prediction model that generates a prediction and an “adversary” model that attempts to infer a protected attribute (such as race) from the first model’s prediction.

The primary model is trained to perform well while deceiving the adversary, learning to generate predictions that are insensitive to the sensitive attribute.

Defining Metrics of Fairness: Fairness does not mean the same thing to everyone; it can be defined mathematically in many different ways. Your team must pick the most suitable definition for your situation.

Common measures are:

Demographic Parity: Requires that the probability of a positive outcome (e.g., loan acceptance) is the same for every demographic group.

Equal Opportunity: Requires that, among all the individuals who are actually qualified, the model’s true positive rate is the same for all groups.

Choosing between these definitions is a moral decision, not just a technical one, and it should be made transparently and cautiously.
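Both measures reduce to simple ratios, which the following sketch computes on a made-up set of eight loan decisions. The labels, predictions, and group names are hypothetical, and the helper names are chosen for this example rather than taken from any fairness library.

```python
def selection_rate(y_pred, groups, group):
    """Share of people in `group` who received a positive prediction."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)


def true_positive_rate(y_true, y_pred, groups, group):
    """Among actually qualified people in `group`, the share predicted positive."""
    preds = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(preds) / len(preds)


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [selection_rate(y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups (0 means equal opportunity)."""
    rates = [true_positive_rate(y_true, y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Hypothetical loan data: 1 = qualified / approved, 0 = not qualified / denied.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 1, 0]

print("demographic parity difference:", demographic_parity_difference(y_pred, groups))
print("equal opportunity difference:", equal_opportunity_difference(y_true, y_pred, groups))
```

The two numbers differ on the same predictions, which is why the choice of definition matters: a model can look acceptable under one measure and clearly unfair under the other.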

Phase 3: Post-Processing (Vigilant Monitoring Following Deployment)

The work is not complete once the model starts running. Real-world data can change, and new biases can be introduced. Ongoing monitoring is essential.

Impact Analysis & Monitoring: After deployment, test the model’s performance “in the wild” on a regular schedule.

Monitor not only overall accuracy, but also break down performance by demographic group.

Is the model’s error rate much higher for one group than another? This regular testing allows you to detect “model drift” and helps the system stay fair over the long term.
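A monitoring check of this kind can be as small as the sketch below, which breaks the error rate down by group for each batch of production data and flags batches where the gap is too wide. The batch labels, the data, and the 10-point threshold are hypothetical; a suitable threshold depends on the application.

```python
def error_rates_by_group(y_true, y_pred, groups):
    """Fraction of wrong predictions within each group."""
    totals, errors = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] = totals.get(group, 0) + 1
        errors[group] = errors.get(group, 0) + int(truth != pred)
    return {group: errors[group] / totals[group] for group in totals}


def fairness_alerts(batches, max_gap=0.10):
    """Return (label, rates) for every batch whose error-rate gap exceeds `max_gap`."""
    alerts = []
    for label, (y_true, y_pred, groups) in batches:
        rates = error_rates_by_group(y_true, y_pred, groups)
        if max(rates.values()) - min(rates.values()) > max_gap:
            alerts.append((label, rates))
    return alerts


# Hypothetical monitoring data: the same true outcomes, scored in two different weeks.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
batches = [
    ("week 1", (y_true, [1, 0, 1, 1, 1, 0, 0, 0], groups)),  # one error in each group
    ("week 2", (y_true, [1, 0, 1, 1, 1, 1, 0, 0], groups)),  # group b now has two errors
]

for label, rates in fairness_alerts(batches):
    print("fairness alert in", label, rates)
```

Overall accuracy alone would hide the change in the second batch; only the per-group breakdown shows that the extra errors fall on one group.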

Create Feedback Loops: The people most affected by an AI system are often the most aware of its errors. Create easy and transparent ways for users to report suspected bias.

This may be as simple as a “Report a problem” button or a dedicated feedback form. User feedback is extremely useful to catch and correct things you might have missed.

Transparency and Explainability (XAI): You should understand why your model is coming to its conclusions. That is the goal of Explainable AI (XAI)—a collection of tools and techniques that assist us in peering into the “black box.”

Tools such as LIME (Local Interpretable Model-agnostic Explanations) can reveal what variables were most significant for a specific prediction.

SHAP (SHapley Additive exPlanations) can provide an overall view of which features are most significant for the entire model.

Transparency in model decisions helps you to better detect, define, and act on biased behavior.
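SHAP builds on Shapley values, and the underlying idea can be shown without any library. The sketch below computes exact Shapley values by brute force for one prediction of a toy scoring function. It is not the SHAP package: the scoring rule, the feature names, the applicant, and the baseline are all hypothetical, and the brute-force approach is only feasible for a handful of features.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley values for one prediction.

    Averages each feature's marginal contribution over every order in which
    features can be switched from their baseline value to their actual value.
    """
    features = list(instance)
    contributions = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for ordering in orderings:
        current = dict(baseline)
        previous_output = model(current)
        for name in ordering:
            current[name] = instance[name]
            output = model(current)
            contributions[name] += output - previous_output
            previous_output = output
    return {name: total / len(orderings) for name, total in contributions.items()}


def credit_score(applicant):
    """Hypothetical scoring rule, used only to have something to explain."""
    return (
        0.5 * applicant["income"]
        + 0.3 * applicant["years_employed"]
        - 2.0 * applicant["lives_in_flagged_zip"]
    )


applicant = {"income": 4.0, "years_employed": 10.0, "lives_in_flagged_zip": 1.0}
baseline = {"income": 3.0, "years_employed": 5.0, "lives_in_flagged_zip": 0.0}

explanation = shapley_values(credit_score, applicant, baseline)
for name, value in sorted(explanation.items(), key=lambda item: abs(item[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
```

In this toy case the zip code flag contributes more to the score than income or employment history, which is exactly the kind of signal a fairness review would want to investigate.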

The Broader Picture: The Role of Ethics and Regulation

Defeating AI bias extends well beyond the technical domain of developers and into ethics, governance, and law.

Mitigating bias is no longer merely good practice; it has rapidly become an issue of corporate responsibility and legal compliance.

Responsible AI (RAI) frameworks are gaining traction among organizations. These frameworks are internal governance mechanisms that go beyond technical debiasing to embody principles of transparency, accountability, privacy, and human-centered design.

An RAI framework institutes the consideration of ethics in all aspects of the AI lifecycle, from its initial conception right through to its long-term monitoring.

It represents a formal commitment to building AI that is not only effective but also safe, fair, and trustworthy.

In parallel, governments are also drawing their lines. Of all developments, the European Union’s AI Act stands out: it is the first legal instrument with global ambitions to regulate AI.

It classifies AI systems by level of risk and subjects “high-risk” applications, such as hiring, credit scoring, and law enforcement, to strict requirements.

Under regulations like this, data governance, transparency, human oversight, and fairness evaluation become strict requirements; in many cases, the strategies discussed here will be not just recommended but mandatory.

For any serious AI strategy, then, keeping abreast of this shifting landscape has become a must.

Conclusion: Towards a Fairer Future, One Algorithm at a Time

The journey into the world of AI bias reveals a basic truth: our technology is a mirror. It reflects the best that we can offer and the worst that our society can be.

We’ve seen that AI bias is not a ghost in the machine but a direct consequence of human actions: from imperfect data to unexamined algorithms to the unconscious assumptions of their creators.

The stakes are real, ranging from social justice to economic viability.

But the story does not end there. We are not powerless spectators. By implementing a proactive, multi-stage strategy, we can actively intervene.

It begins with auditing our data and diversifying our teams before training even starts.

It continues with building fairness directly into our algorithms and concludes with vigilant, transparent monitoring after deployment.

Fighting AI bias isn’t just a technical challenge; it’s an ethical imperative.

By building awareness and implementing robust strategies, we can steer AI away from perpetuating the inequalities of our past and towards building a more equitable and just future.

Frequently Asked Questions about AI Bias

Can AI bias be completely eliminated?

Realistically, no. Complete elimination of AI bias is likely an impossible goal because AI systems are trained on human-generated data, and human society itself is not free from bias. As long as there are inequalities, stereotypes, and historical imbalances reflected in our data, there is a risk of that bias seeping into our models.

However, the goal is not perfection but mitigation and responsible management. Through diligent auditing, thoughtful data handling, fairness-aware algorithms, and continuous monitoring, we can significantly reduce AI bias and build systems that are demonstrably fairer and more equitable. It’s an ongoing process of vigilance, not a one-time fix.

What is the difference between fairness and accuracy in AI?

Accuracy and fairness are two different, and sometimes competing, goals for an AI model.

1. Accuracy measures how often the model makes a correct prediction across the entire dataset. A model with 99% accuracy gets the answer right 99 out of 100 times on average.

2. Fairness measures how the model’s performance is distributed across different demographic subgroups. A model can be 99% accurate overall but achieve that by being 100% accurate for a majority group and only 50% accurate for a minority group.

This is the “accuracy-fairness trade-off.” Sometimes, to make a model fairer, you may need to accept a slight decrease in its overall accuracy. The most responsible approach is to define and measure both, making a conscious decision about the right balance for your specific application.
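The arithmetic behind the 99% example is worth seeing once. The answer above does not say how large each group is, so the sketch assumes a 98% majority and a 2% minority, which is the split under which those accuracy figures are consistent.

```python
def overall_accuracy(group_shares, group_accuracies):
    """Overall accuracy as the share-weighted average of per-group accuracy."""
    return sum(group_shares[g] * group_accuracies[g] for g in group_shares)


# Assumed group sizes (not from a real dataset): 98% majority, 2% minority.
group_shares = {"majority": 0.98, "minority": 0.02}
group_accuracies = {"majority": 1.00, "minority": 0.50}

overall = overall_accuracy(group_shares, group_accuracies)
print(f"overall accuracy: {overall:.2%}")
```

Under these assumptions the headline figure comes out at 99% even though the model is no better than a coin flip for the minority group, which is why overall accuracy should always be reported alongside a per-group breakdown.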

Who is responsible when a biased AI makes a harmful decision?

This is a complex and evolving question at the intersection of ethics and law. There isn’t a single answer, as accountability is often shared across a chain of responsibility. Potential parties include:

1. The Organization: The company that develops, sells, or deploys the AI system ultimately bears the primary responsibility for its impact and for conducting proper due diligence.

2. The Developers: The data scientists and engineers who designed the model and chose the data have a professional responsibility to follow best practices for AI bias mitigation.

3. The End-User: The person or institution using the AI’s output may be responsible if they rely on it blindly without exercising critical judgment or human oversight.

Emerging regulations like the EU’s AI Act are beginning to formalize liability, placing the strongest legal obligations on the organizations that deploy high-risk AI systems.

What are some tools to detect and mitigate AI bias?

A growing ecosystem of tools is available to help developers build fairer AI. These can be grouped into two main categories:

1. Open-Source Fairness Toolkits: These are comprehensive libraries for auditing and debiasing.

- IBM AI Fairness 360 (AIF360): An extensive toolkit with over 70 fairness metrics and 10 mitigation algorithms.

- Microsoft’s Fairlearn: An open-source package that integrates with popular machine learning tools to assess and improve the fairness of models.

- Google’s What-If Tool: A visual, interactive tool that allows developers to explore model performance and fairness across different slices of data.

2. Explainability (XAI) Tools: These help you understand why a model is making its decisions, which is crucial for diagnosing AI bias.

- SHAP (SHapley Additive exPlanations): A powerful method to show the impact of each feature on a model’s prediction.

- LIME (Local Interpretable Model-agnostic Explanations): A tool for explaining individual predictions, helping you spot anomalies.